AI Pilots in Healthcare: Hidden Costs Total Millions

Healthcare systems across the United States are facing significant financial burdens from the proliferation of “free” AI pilots. A recent report from the Massachusetts Institute of Technology (MIT) indicates that approximately 95 percent of generative AI pilots fail. MIT calls this the “GenAI Divide”: many organizations adopt generic AI tools that impress in demonstrations but falter in real-world use.

Understanding the Financial Impact of AI Pilots

Many U.S. health systems have been inundated with offers for free trials from AI vendors. These trials often begin with enthusiasm from decision-makers, leading to initial approvals for pilot programs. However, the costs quickly mount as teams dedicate valuable time and resources to these initiatives. In 2022, a study from Stanford University revealed that these so-called “free” models, which require additional custom data and training for clinical use, can cost upwards of $200,000. Despite this investment, they frequently fail to yield tangible improvements in patient care or cost savings.

The cumulative effect of these failures can lead to millions in wasted funds across numerous unsuccessful pilots. Because AI has been positioned as a revolutionary solution for healthcare, the repeated failures erode trust and reinforce skepticism about its value. That skepticism carries its own cost: when AI is implemented effectively, it can alleviate clinician burnout and enhance workflow efficiency, according to the American Medical Association (AMA).

Strategies for Successful AI Implementation

To mitigate the risks associated with AI pilots, healthcare leaders must adopt a structured approach. The first discipline is design. Before initiating another pilot, leaders should clearly define the tool's target audience, the specific problem it addresses, and its role within existing workflows. Crucially, they should also articulate why they are adopting the technology, because that understanding is vital for establishing measurable success criteria.

Equally important is the discipline in defining outcomes. Every pilot should start with a clear and measurable definition of success based on the organization’s priorities. This could include metrics such as reducing report turnaround times, minimizing administrative burdens, or improving patient access. For instance, an AI model aimed at identifying patients at risk for breast cancer must demonstrate its effectiveness in flagging risks and facilitating timely follow-ups.

Lastly, discipline in partnerships is critical. While it may be tempting to select the largest or most established vendor, size does not guarantee success. MIT emphasizes that generic AI tools often fail due to their inability to accommodate the complexities of healthcare workflows. Organizations that succeed will be those that collaborate with partners who understand their specific needs, help define outcomes, and share accountability for results.

Choosing the right AI solution is essential. A poor choice can leave an organization with a costly, self-directed project fraught with risks. Conversely, selecting the appropriate technology can pave the way for sustainable success in healthcare. AI does not fail due to deficiencies in the technology itself; it falters when decision-makers proceed without adequate frameworks, discipline, or appropriate partnerships.

The hidden costs associated with “free” AI pilots represent a significant challenge for healthcare systems. As the industry continues to explore the potential of AI, it is imperative to learn from past mistakes and implement more strategic approaches to avoid repeating the same costly lessons.

-

Business2 weeks ago

Business2 weeks agoIconic Sand Dollar Social Club Listed for $3 Million in Folly Beach

-

Politics2 weeks ago

Politics2 weeks agoAfghan Refugee Detained by ICE After Asylum Hearing in New York

-

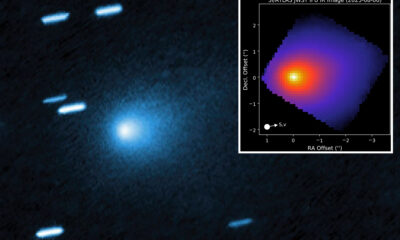

Science2 weeks ago

Science2 weeks agoInterstellar Object 3I/ATLAS Emits Unique Metal Alloy, Says Scientist

-

Health2 weeks ago

Health2 weeks agoPeptilogics Secures $78 Million to Combat Prosthetic Joint Infections

-

Science2 weeks ago

Science2 weeks agoResearchers Achieve Fastest Genome Sequencing in Under Four Hours

-

Lifestyle2 weeks ago

Lifestyle2 weeks agoJump for Good: San Clemente Pier Fundraiser Allows Legal Leaps

-

Health2 weeks ago

Health2 weeks agoResearcher Uncovers Zika Virus Pathway to Placenta Using Nanotubes

-

World2 weeks ago

World2 weeks agoUS Passport Ranks Drop Out of Top 10 for First Time Ever

-

Top Stories2 weeks ago

Top Stories2 weeks agoChicago Symphony Orchestra Dazzles with Berlioz Under Mäkelä

-

Business2 weeks ago

Business2 weeks agoSan Jose High-Rise Faces Foreclosure Over $182.5 Million Loan

-

Entertainment2 weeks ago

Entertainment2 weeks agoJennifer Lopez Addresses A-Rod Split in Candid Interview

-

World2 weeks ago

World2 weeks agoRegional Pilots’ Salaries Surge to Six Figures in 2025