
Unraveling Causation: The Challenge of Identifying True Links

Understanding the distinction between causation and correlation is increasingly vital across many fields, particularly health policy and medical research. The distinction matters for questions that shape public health and policy, such as whether acetaminophen use is linked to an increased risk of autism, or whether homework genuinely improves educational performance.

Determining causation remains a complex endeavor, as mere correlations can be misleading. For instance, both crime rates and ice cream consumption tend to rise during the summer months, but one does not cause the other. Instead, underlying factors like school vacations and warmer weather contribute to both trends. This example illustrates how confounding factors can obscure the true relationship between two variables.
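The ice cream and crime example can be reproduced with a small simulation. The sketch below is illustrative only: the variable names, coefficients, and noise levels are invented assumptions, not estimates from real data. Temperature drives both series, neither affects the other, and the correlation largely disappears once temperature is accounted for.

```python
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def residuals(y, x):
    """What is left of y after removing its straight-line dependence on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return [b - my - slope * (a - mx) for a, b in zip(x, y)]


def simulate_summer(n_days=2000, seed=0):
    """Hypothetical data: temperature raises both ice cream sales and crime."""
    rng = random.Random(seed)
    temperature = [rng.gauss(20, 8) for _ in range(n_days)]
    # Assumed (made-up) relationships; ice cream never enters the crime equation.
    ice_cream = [2.0 * t + rng.gauss(0, 10) for t in temperature]
    crime = [1.5 * t + rng.gauss(0, 10) for t in temperature]

    raw = pearson(ice_cream, crime)
    adjusted = pearson(residuals(ice_cream, temperature),
                       residuals(crime, temperature))
    return raw, adjusted


raw, adjusted = simulate_summer()
print(f"raw correlation: {raw:.2f}")
print(f"after adjusting for temperature: {adjusted:.2f}")
```

The adjustment works here only because the confounder is measured. In real studies the difficulty is that some confounders are unknown or unrecorded.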

Researchers often encounter similar challenges when exploring the effects of interventions or treatments. In educational settings, schools that assign more homework may also implement other practices that promote academic success, leading to misleading associations between homework and performance. This phenomenon is known as selection bias, where groups differ systematically in ways that can distort findings.

To navigate these complexities, many researchers rely on what is considered the “gold standard” for establishing causality: randomized controlled trials (RCTs). By randomly assigning participants to receive a specific treatment or not, researchers can control for preexisting differences, allowing for a clearer understanding of outcomes. However, RCTs are not always feasible due to ethical concerns or logistical challenges. For example, it would be unethical to randomly assign pregnant individuals to take or abstain from acetaminophen, given its established benefits.
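A rough simulation of the homework example shows why random assignment helps. Everything in this sketch is a hypothetical assumption: the "resources" variable, the score formula, and a true homework effect of 2 points. In the self-selected scenario, better-resourced schools are more likely to assign homework; in the randomized scenario, a coin flip decides.

```python
import random


def difference_in_means(treated, outcomes):
    """Average outcome of the treated group minus that of the control group."""
    treated_outcomes = [y for d, y in zip(treated, outcomes) if d]
    control_outcomes = [y for d, y in zip(treated, outcomes) if not d]
    return (sum(treated_outcomes) / len(treated_outcomes)
            - sum(control_outcomes) / len(control_outcomes))


def compare_designs(n_schools=20000, true_effect=2.0, seed=1):
    """Return (naive observational estimate, randomized estimate)."""
    rng = random.Random(seed)
    # Hypothetical preexisting difference between schools.
    resources = [rng.gauss(0, 1) for _ in range(n_schools)]

    def scores(assignment):
        # Assumed score model: homework adds true_effect, resources add more.
        return [70 + true_effect * d + 5 * r + rng.gauss(0, 3)
                for d, r in zip(assignment, resources)]

    # Observational world: well-resourced schools tend to assign homework.
    self_selected = [r + rng.gauss(0, 1) > 0 for r in resources]
    # Randomized trial: assignment ignores resources entirely.
    randomized = [rng.random() < 0.5 for _ in range(n_schools)]

    naive = difference_in_means(self_selected, scores(self_selected))
    rct = difference_in_means(randomized, scores(randomized))
    return naive, rct


naive, rct = compare_designs()
print(f"self-selected comparison: {naive:.2f}")
print(f"randomized comparison:    {rct:.2f}")
```

Under these assumptions the self-selected comparison overstates the homework effect several times over, while the randomized comparison lands close to the assumed value of 2.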

Innovative Research Designs

In situations where randomization is impractical, researchers employ innovative designs to analyze non-randomized data. These approaches often draw on existing datasets such as electronic health records or large cohort studies like the Nurses' Health Study. The goal is to minimize confounding by leveraging naturally occurring randomness or by adjusting for observed confounding variables.

One widely used method is the instrumental variables design. It relies on a factor that shifts the exposure of interest but has no other pathway to the outcome. For example, researchers might randomly encourage participants to increase their fruit and vegetable intake through incentives like coupons or educational messaging, then use that encouragement to isolate the effect of diet on health. Similarly, they might use variation in access to grocery stores as a source of differences in dietary habits, provided that access influences health outcomes only through diet.
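The encouragement idea can be sketched with the simplest instrumental variables estimator, the Wald ratio: the difference in outcomes between encouraged and non-encouraged groups, divided by the difference in exposure. The simulation below is a hypothetical illustration. The coupon, the unobserved "health consciousness" trait, and the assumed true effect of 0.5 are all invented, and the code assumes the coupon affects health only through intake.

```python
import random


def mean(values):
    return sum(values) / len(values)


def ols_slope(x, y):
    """Slope from a simple regression of y on x (the naive estimate)."""
    mx, my = mean(x), mean(y)
    return (sum((a - mx) * (b - my) for a, b in zip(x, y))
            / sum((a - mx) ** 2 for a in x))


def wald_estimate(instrument, exposure, outcome):
    """Wald ratio for a binary (0/1) instrument."""
    def split(values, flag):
        return [v for z, v in zip(instrument, values) if z == flag]

    # Effect of the instrument on the outcome ...
    reduced_form = mean(split(outcome, 1)) - mean(split(outcome, 0))
    # ... scaled by its effect on the exposure.
    first_stage = mean(split(exposure, 1)) - mean(split(exposure, 0))
    return reduced_form / first_stage


def simulate_encouragement(n=50000, true_effect=0.5, seed=2):
    """Return (naive regression slope, instrumental variables estimate)."""
    rng = random.Random(seed)
    coupon = [rng.randint(0, 1) for _ in range(n)]          # randomized
    health_conscious = [rng.gauss(0, 1) for _ in range(n)]  # unobserved
    # Assumed model: coupons and health consciousness both raise intake.
    intake = [z + u + rng.gauss(0, 1)
              for z, u in zip(coupon, health_conscious)]
    # Health depends on intake and, separately, on health consciousness.
    health = [true_effect * x + 2 * u + rng.gauss(0, 1)
              for x, u in zip(intake, health_conscious)]
    return ols_slope(intake, health), wald_estimate(coupon, intake, health)


naive, iv = simulate_encouragement()
print(f"naive regression slope: {naive:.2f}")
print(f"instrumental variables: {iv:.2f}")
```

In this setup the naive slope absorbs the influence of the unobserved trait, while the ratio based on the randomized coupon stays near the assumed effect. If the coupon had its own route to better health, the estimate would be biased as well.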

Another strategy, termed difference-in-differences or comparative interrupted time series, compares groups before and after a policy change, such as an alteration in access to medications. The change observed in a comparison group that did not experience the policy serves as an estimate of what would have happened to the affected group without it. These studies often draw on publicly available data and can yield robust insights when a suitable comparison group exists.
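The arithmetic behind difference-in-differences is short enough to write out. The function and the four averages below are hypothetical placeholders, not figures from any study. The estimate is credible only if the two groups would have followed parallel trends without the policy.

```python
def difference_in_differences(treated_pre, treated_post,
                              control_pre, control_post):
    """Change in the policy group minus change in the comparison group."""
    treated_change = treated_post - treated_pre
    control_change = control_post - control_pre
    return treated_change - control_change


# Hypothetical average outcomes before and after a policy change.
estimate = difference_in_differences(
    treated_pre=10.0, treated_post=16.0,
    control_pre=9.0, control_post=12.0,
)
print(estimate)  # 3.0
```

Here the policy group rose by 6 and the comparison group by 3, so 3 of the 6 points are attributed to the policy and the rest to trends that affected everyone.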

Cohort studies, including the Nurses' Health Study, also use comparison group designs that adjust for various characteristics, thereby addressing potential confounding factors. Techniques like propensity score matching compare individuals with similar backgrounds, which improves the reliability of findings.
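A deliberately simplified sketch of propensity score matching follows. It assumes a single observed confounder (a hypothetical age group), estimates each person's propensity as the share treated within that group, and compares each treated person with the average of the controls whose score is closest. Real analyses typically fit a regression model over many covariates and match individuals one to one; the data and the assumed effect of 1.0 are invented.

```python
import random
from collections import defaultdict


def mean(values):
    return sum(values) / len(values)


def estimate_propensity(covariate, treated):
    """Propensity score = share of people treated within each covariate value."""
    counts = defaultdict(lambda: [0, 0])  # value -> [n_treated, n_total]
    for x, d in zip(covariate, treated):
        counts[x][0] += int(d)
        counts[x][1] += 1
    return [counts[x][0] / counts[x][1] for x in covariate]


def matched_effect_on_treated(propensity, treated, outcome):
    """Compare each treated unit with controls at the nearest propensity score."""
    control_outcomes = defaultdict(list)
    for p, d, y in zip(propensity, treated, outcome):
        if not d:
            control_outcomes[p].append(y)
    control_mean = {p: mean(ys) for p, ys in control_outcomes.items()}
    scores = list(control_mean)

    gaps = []
    for p, d, y in zip(propensity, treated, outcome):
        if d:
            nearest = min(scores, key=lambda s: abs(s - p))
            gaps.append(y - control_mean[nearest])
    return mean(gaps)


def simulate_cohort(n=20000, true_effect=1.0, seed=3):
    """Return (naive difference in means, matched estimate)."""
    rng = random.Random(seed)
    age_group = [rng.randint(0, 3) for _ in range(n)]
    # Assumed: older groups are more likely to be treated and score higher.
    treated = [rng.random() < 0.2 + 0.2 * g for g in age_group]
    outcome = [true_effect * d + 2 * g + rng.gauss(0, 1)
               for d, g in zip(treated, age_group)]

    naive = (mean([y for d, y in zip(treated, outcome) if d])
             - mean([y for d, y in zip(treated, outcome) if not d]))
    propensity = estimate_propensity(age_group, treated)
    return naive, matched_effect_on_treated(propensity, treated, outcome)


naive, matched = simulate_cohort()
print(f"naive difference: {naive:.2f}")
print(f"matched estimate: {matched:.2f}")
```

Matching recovers the assumed effect here because age group is the only confounder and it is observed. It cannot correct for characteristics that were never measured.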

Building Evidence Over Time

The landscape of research designs is diverse, with each approach suitable for different contexts and research questions. Researchers are encouraged to familiarize themselves with a variety of methodologies to enrich their analytical toolkit. This diversity is particularly beneficial for complex causal questions that resist straightforward answers.

As researchers tackle intricate issues, such as the potential links between acetaminophen and autism, it is essential to recognize that understanding causation is an ongoing journey rather than a definitive endpoint. As noted by Cordelia Kwon, a Ph.D. student in health policy at Harvard University, and Elizabeth A. Stuart, a professor and chair of the Department of Biostatistics at the Johns Hopkins Bloomberg School of Public Health, the process of synthesizing evidence across studies necessitates careful consideration and input from experts in both substantive and methodological fields.

Ultimately, determining causation is an intricate process that demands rigorous research and a willingness to embrace uncertainty. As new questions arise and existing answers evolve, the commitment to thorough investigation will foster a deeper understanding of the causal relationships that underpin health and policy decisions.

-

Business1 week ago

Business1 week agoIconic Sand Dollar Social Club Listed for $3 Million in Folly Beach

-

Politics1 week ago

Politics1 week agoAfghan Refugee Detained by ICE After Asylum Hearing in New York

-

Health1 week ago

Health1 week agoPeptilogics Secures $78 Million to Combat Prosthetic Joint Infections

-

Science1 week ago

Science1 week agoResearchers Achieve Fastest Genome Sequencing in Under Four Hours

-

Lifestyle1 week ago

Lifestyle1 week agoJump for Good: San Clemente Pier Fundraiser Allows Legal Leaps

-

Health1 week ago

Health1 week agoResearcher Uncovers Zika Virus Pathway to Placenta Using Nanotubes

-

World1 week ago

World1 week agoUS Passport Ranks Drop Out of Top 10 for First Time Ever

-

Entertainment1 week ago

Entertainment1 week agoJennifer Lopez Addresses A-Rod Split in Candid Interview

-

World1 week ago

World1 week agoRegional Pilots’ Salaries Surge to Six Figures in 2025

-

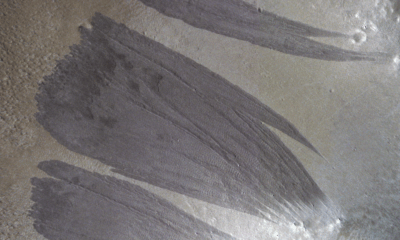

Science1 week ago

Science1 week agoMars Observed: Detailed Imaging Reveals Dust Avalanche Dynamics

-

Top Stories6 days ago

Top Stories6 days agoChicago Symphony Orchestra Dazzles with Berlioz Under Mäkelä

-

World1 week ago

World1 week agoObama Foundation Highlights Challenges in Hungary and Poland