
Google Unveils FunctionGemma: New AI Model for Mobile Control

Google has launched FunctionGemma, a specialized AI model that lets users control mobile devices and apps with natural-language commands. The model has 270 million parameters and addresses a critical issue in modern application development: reliability at the edge. Unlike traditional chatbots, FunctionGemma translates user commands into structured function calls that apps can execute, without requiring cloud connectivity. The launch marks a significant shift in Google’s strategy within the AI landscape.

Strategic Shift to Local AI Solutions

The introduction of FunctionGemma represents a strategic pivot for Google DeepMind and the Google AI Developers team. While much of the industry focuses on scaling ever-larger models in the cloud, FunctionGemma highlights the potential of Small Language Models (SLMs) that run locally on phones, in browsers, and on IoT gadgets. This “privacy-first” approach lets complex logic run on-device, minimizing latency and improving the user experience.

FunctionGemma is available for immediate download on platforms such as Hugging Face and Kaggle. Users can also explore its capabilities through the Google AI Edge Gallery app, downloadable from the Google Play Store.

Performance Highlights and Developer Tools

FunctionGemma specifically targets the “execution gap” in generative AI. Large language models excel at conversation, but they often fall short at reliably triggering software actions, particularly on resource-constrained devices. In Google’s internal “Mobile Actions” evaluation, generic small models achieved only 58% baseline accuracy on function-calling tasks. Once fine-tuned for this specific purpose, FunctionGemma’s accuracy rose to 85%, and the model proved capable of complex tasks such as identifying specific grid coordinates for game mechanics.
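Taken at face value, and assuming both figures were measured on the same evaluation set, the jump from 58% to 85% matters more than the 27-point difference suggests. The gain comes from shrinking the share of failed calls:

```latex
\begin{aligned}
\text{error}_{\text{base}}  &= 1 - 0.58 = 0.42 \\
\text{error}_{\text{tuned}} &= 1 - 0.85 = 0.15 \\
\text{relative error reduction} &= \frac{0.42 - 0.15}{0.42} = \frac{0.27}{0.42} \approx 0.64
\end{aligned}
```

Put simply, roughly 15 of every 100 calls would fail instead of 42, which is close to two-thirds fewer failures.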

Google is not just providing the model; developers will receive a comprehensive toolkit that includes:

- The Model: A 270-million-parameter transformer trained on 6 trillion tokens.

- Training Data: A specialized “Mobile Actions” dataset for developing custom agents.

- Ecosystem Support: Compatibility with various libraries including Hugging Face Transformers, Keras, and NVIDIA NeMo.
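To make the “execution” side concrete, here is a minimal Python sketch of what an app does with a model’s function call. The app parses the structured output and dispatches it to a local function. For illustration only, the sketch assumes the model emits JSON of the form {"name": ..., "arguments": {...}}. This is not necessarily FunctionGemma’s actual output format. The `set_alarm` and `toggle_flashlight` actions and the `execute_call` helper are hypothetical.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical on-device actions an app might expose to the model.
def set_alarm(hour: int, minute: int) -> str:
    return f"Alarm set for {hour:02d}:{minute:02d}"


def toggle_flashlight(on: bool) -> str:
    return "Flashlight on" if on else "Flashlight off"


# Registry mapping the function names the model may emit to real callables.
TOOLS: Dict[str, Callable[..., str]] = {
    "set_alarm": set_alarm,
    "toggle_flashlight": toggle_flashlight,
}


def execute_call(model_output: str) -> str:
    """Parse an (assumed) JSON function call and run the matching local function."""
    call: Dict[str, Any] = json.loads(model_output)
    name = call.get("name")
    if name not in TOOLS:
        # Refuse anything the app has not explicitly exposed.
        raise ValueError(f"Unknown function: {name!r}")
    return TOOLS[name](**call.get("arguments", {}))


if __name__ == "__main__":
    # Stand-in for what the model might emit for "wake me up at 7:30".
    print(execute_call('{"name": "set_alarm", "arguments": {"hour": 7, "minute": 30}}'))
```

The explicit registry limits the model to actions the app has chosen to expose. Anything else is rejected instead of guessed.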

Omar Sanseviero, the Developer Experience Lead at Hugging Face, emphasized the model’s adaptability on social media, stating that it is “designed to be specialized for your own tasks” and can operate across multiple devices.

The local-first design offers several advantages: it enhances user privacy by keeping personal data on-device, it reduces latency so that actions feel near-instant, and it eliminates token-based API fees for simple user interactions.
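The API-fee point comes down to simple arithmetic. The sketch below estimates what a volume of simple requests would cost if each one were sent to a metered cloud API. The request count, tokens per request, and per-million-token price are hypothetical placeholders, not published pricing. Handling the same requests on-device avoids this fee, although it still uses the device’s own compute and battery.

```python
def estimate_api_cost(requests: int, tokens_per_request: int, usd_per_million_tokens: float) -> float:
    """Estimated cloud API spend in USD for a batch of requests.

    All inputs are hypothetical; substitute real traffic figures and a provider's actual pricing.
    """
    total_tokens = requests * tokens_per_request
    return total_tokens / 1_000_000 * usd_per_million_tokens


if __name__ == "__main__":
    # Hypothetical: 1M simple commands per month, ~200 tokens each, $0.50 per million tokens.
    monthly = estimate_api_cost(1_000_000, 200, 0.50)
    print(f"Estimated monthly API spend: ${monthly:,.2f}")
```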

Implications for Enterprises and Developers

For enterprise developers, FunctionGemma encourages a departure from traditional monolithic AI systems towards more efficient, compound systems. By deploying FunctionGemma as an intelligent “traffic controller” directly on user devices, companies can significantly cut down on cloud inference costs while maintaining high responsiveness.

FunctionGemma can serve as the first line of defense, handling common commands quickly while routing more complex inquiries to larger cloud models when necessary. This hybrid approach not only reduces costs but also enhances performance, particularly in environments requiring high reliability.
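The “traffic controller” pattern can be sketched as a small router. This is a minimal Python illustration under stated assumptions, not Google’s implementation. Here `local_model` and `cloud_model` stand in for whatever on-device and cloud inference calls an app uses. The `confidence` score and the 0.8 threshold are hypothetical, because a real deployment needs its own signal for deciding when a local result can be trusted.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Set


@dataclass
class LocalResult:
    function_name: Optional[str]  # None when the on-device model finds no matching action
    confidence: float             # hypothetical score in [0, 1]


def route(
    command: str,
    local_model: Callable[[str], LocalResult],
    cloud_model: Callable[[str], str],
    known_functions: Set[str],
    threshold: float = 0.8,
) -> str:
    """Handle a command on-device when possible; otherwise escalate to the cloud."""
    result = local_model(command)
    if result.function_name in known_functions and result.confidence >= threshold:
        # Common, well-understood command: execute locally with no network round trip.
        return f"local:{result.function_name}"
    # Unknown or low-confidence request: defer to a larger cloud model.
    return f"cloud:{cloud_model(command)}"


if __name__ == "__main__":
    # Stubs standing in for the real on-device and cloud models.
    def fake_local(cmd: str) -> LocalResult:
        if "flashlight" in cmd:
            return LocalResult("toggle_flashlight", 0.95)
        return LocalResult(None, 0.2)

    def fake_cloud(cmd: str) -> str:
        return "long-form answer"

    tools = {"toggle_flashlight", "set_alarm"}
    print(route("turn on the flashlight", fake_local, fake_cloud, tools))
    print(route("summarize my week", fake_local, fake_cloud, tools))
```

In this design the router falls back to the cloud rather than guessing. A request stays on-device only when it maps to a known function with enough confidence.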

Google also positions the model as offering more predictable, deterministic behavior, which is crucial for applications in sectors such as finance and healthcare. The model is small enough that fine-tuning it on domain-specific data is practical, so it can be made to behave predictably in production.
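One practical way to get predictable behavior in production is to never execute a model’s output blindly. The Python sketch below checks a proposed call against a schema before running it. The `transfer_funds` function, its arguments, and the list of allowed currencies are hypothetical examples and are not part of FunctionGemma or its toolkit.

```python
from typing import Any, Dict, List, Optional, Set, Tuple

# Hypothetical schema: for each function, argument name -> (accepted types, allowed values or None).
ArgSpec = Tuple[Tuple[type, ...], Optional[Set[Any]]]
SCHEMA: Dict[str, Dict[str, ArgSpec]] = {
    "transfer_funds": {
        "amount": ((int, float), None),
        "currency": ((str,), {"USD", "EUR", "GBP"}),
    },
}


def validate_call(name: str, args: Dict[str, Any]) -> List[str]:
    """Return the problems with a model-proposed call; an empty list means it passed every check."""
    if name not in SCHEMA:
        return [f"unknown function {name!r}"]
    spec = SCHEMA[name]
    problems = [f"missing argument {a!r}" for a in sorted(spec.keys() - args.keys())]
    problems += [f"unexpected argument {a!r}" for a in sorted(args.keys() - spec.keys())]
    for arg, (types, allowed) in spec.items():
        if arg not in args:
            continue
        value = args[arg]
        # bool is a subclass of int in Python, so reject booleans explicitly (no argument here expects one).
        if isinstance(value, bool) or not isinstance(value, types):
            problems.append(f"{arg!r} has wrong type {type(value).__name__}")
        elif allowed is not None and value not in allowed:
            problems.append(f"{arg!r} value {value!r} not allowed")
    return problems


if __name__ == "__main__":
    print(validate_call("transfer_funds", {"amount": 25.0, "currency": "USD"}))  # → []
    print(validate_call("transfer_funds", {"amount": "25", "currency": "BTC"}))
```

Validation does not make the model itself deterministic. It does ensure that a malformed or out-of-policy call is rejected before anything executes.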

Privacy compliance is another key consideration. FunctionGemma’s efficiency allows it to operate entirely on-device, mitigating the risks associated with transmitting sensitive data to the cloud.

Licensing and Use Considerations

FunctionGemma is released under Google’s proprietary Gemma Terms of Use. While described as an “open model,” it does not strictly adhere to the Open Source Initiative’s definition of open source. The licensing structure permits free commercial use, modification, and redistribution, but it also includes specific restrictions on usage, including prohibitions against generating harmful content.

For most developers and startups, the license enables the creation of commercial products. However, those working on dual-use technologies or seeking strict open-source freedom should carefully review the stipulations regarding harmful use and attribution requirements.

As Google continues to innovate in the AI space, FunctionGemma’s release underscores a growing trend towards localized AI solutions that prioritize user privacy, efficiency, and reliability.

-

Lifestyle1 week ago

Lifestyle1 week agoSend Holiday Parcels for £1.99 with New Comparison Service

-

Science2 months ago

Science2 months agoUniversity of Hawaiʻi Leads $25M AI Project to Monitor Natural Disasters

-

Science2 months ago

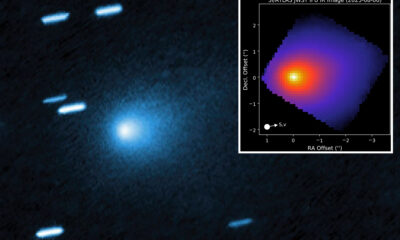

Science2 months agoInterstellar Object 3I/ATLAS Emits Unique Metal Alloy, Says Scientist

-

Science2 months ago

Science2 months agoResearchers Achieve Fastest Genome Sequencing in Under Four Hours

-

Business2 months ago

Business2 months agoIconic Sand Dollar Social Club Listed for $3 Million in Folly Beach

-

Politics2 months ago

Politics2 months agoAfghan Refugee Detained by ICE After Asylum Hearing in New York

-

Health2 months ago

Health2 months agoPeptilogics Secures $78 Million to Combat Prosthetic Joint Infections

-

Business2 months ago

Business2 months agoMcEwen Inc. Secures Tartan Lake Gold Mine Through Acquisition

-

Lifestyle2 months ago

Lifestyle2 months agoJump for Good: San Clemente Pier Fundraiser Allows Legal Leaps

-

Science2 months ago

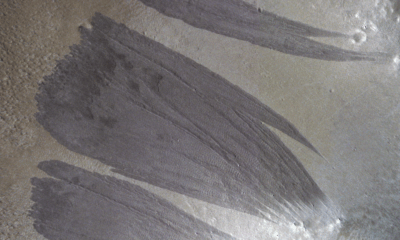

Science2 months agoMars Observed: Detailed Imaging Reveals Dust Avalanche Dynamics

-

Health2 months ago

Health2 months agoResearcher Uncovers Zika Virus Pathway to Placenta Using Nanotubes

-

Entertainment2 months ago

Entertainment2 months agoJennifer Lopez Addresses A-Rod Split in Candid Interview