
Researchers Uncover Bias in Key Algorithm Performance Metric

Recent research has called into question the reliability of Normalized Mutual Information (NMI), a prominent metric for assessing algorithm performance. Scientists widely use it to evaluate how closely an algorithm’s output matches the true classifications of the data. However, a study published in October 2023 suggests that NMI carries inherent biases that can lead to misleading conclusions.

The study, conducted by a team of researchers from various academic institutions, highlights potential pitfalls in the use of NMI. The metric has long been regarded as a standard measure of algorithm performance across numerous applications, particularly in fields that involve grouping and classifying data. Yet the new analysis indicates that NMI does not consistently give an accurate picture of an algorithm’s effectiveness.

Concerns About Bias and Misleading Results

Researchers found that NMI can produce skewed results depending on the characteristics of the data being analyzed. For instance, when algorithms deal with imbalanced datasets, in which some classes are far larger than others, NMI scores can disproportionately favor certain classifications, distorting the perceived accuracy of the algorithm. The finding is significant because many scholars and practitioners rely on NMI to guide decisions in critical areas such as healthcare, finance, and artificial intelligence.
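To make the metric concrete, here is a minimal Python sketch of how NMI is commonly computed. The article does not give the formula the study examined, so this assumes one widespread definition: mutual information divided by the arithmetic mean of the two label entropies. The function names and the 90/10 toy dataset are hypothetical, chosen only for illustration, and the example is not a reproduction of the study's experiments.

```python
from collections import Counter
from math import log


def entropy(labels):
    """Shannon entropy (natural log) of a list of labels."""
    n = len(labels)
    return -sum((c / n) * log(c / n) for c in Counter(labels).values())


def mutual_information(truth, pred):
    """Mutual information between two labelings of the same items."""
    n = len(truth)
    joint = Counter(zip(truth, pred))
    t_counts, p_counts = Counter(truth), Counter(pred)
    return sum(
        (c / n) * log(c * n / (t_counts[t] * p_counts[p]))
        for (t, p), c in joint.items()
    )


def nmi(truth, pred):
    """NMI with arithmetic-mean normalization (an assumed convention)."""
    h_t, h_p = entropy(truth), entropy(pred)
    if h_t == 0.0 and h_p == 0.0:
        return 1.0  # both labelings are a single group: treat as identical
    return mutual_information(truth, pred) / ((h_t + h_p) / 2)


# Hypothetical imbalanced dataset: 90 items in one class, 10 in another.
truth = [0] * 90 + [1] * 10
perfect = list(truth)           # reproduces the true classes exactly
one_cluster = [0] * 100         # lumps every item into a single group
singletons = list(range(100))   # puts every item in its own group

for name, pred in [("perfect", perfect),
                   ("one_cluster", one_cluster),
                   ("singletons", singletons)]:
    print(name, round(nmi(truth, pred), 3))
```

Under this definition, lumping everything together scores zero, while giving every item its own group still earns a positive score even though it tells an analyst nothing about the two real classes. That is one simple way a score of this kind can reward an uninformative output.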

According to the study, the implications of these biases extend beyond academic circles. “If algorithms are assessed with a flawed metric, the consequences can affect real-world applications,” said Dr. Emily Carter, a lead author of the study and a professor at the University of Technology. “Misleading results in algorithm assessments can lead to poor decision-making in sectors that rely heavily on data-driven insights.”

The research team recommends that the scientific community reassess the use of NMI and explore alternative metrics that may offer a clearer and more unbiased view of algorithm performance. They advocate for further studies to evaluate these alternatives comprehensively.

Implications for the Scientific and Business Communities

The findings raise critical questions about the standards used in algorithm evaluation, particularly given the growing reliance on machine learning and artificial intelligence across various industries. As businesses increasingly implement algorithms to drive decisions, understanding their performance metrics becomes vital.

In light of this research, organizations are encouraged to adopt a more critical approach when assessing algorithm effectiveness. The potential for bias in established metrics like NMI may necessitate a shift toward more robust measurement tools, ensuring that algorithms serve their intended purposes without introducing unintended consequences.

The study has already garnered attention within the scientific community and is expected to spark further discussion about the validity of commonly used performance metrics. As the debate evolves, the research team hopes for a broader reevaluation of how algorithms are assessed, with the ultimate aim of more reliable and effective tools for data analysis.

In a rapidly changing digital landscape, staying vigilant about the tools used to evaluate technology is essential. The implications of this research extend far beyond academia and into the daily operations of businesses that depend on accurate data classification and algorithm performance.

-

Science1 month ago

Science1 month agoUniversity of Hawaiʻi Leads $25M AI Project to Monitor Natural Disasters

-

Science2 months ago

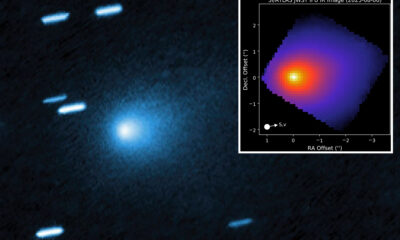

Science2 months agoInterstellar Object 3I/ATLAS Emits Unique Metal Alloy, Says Scientist

-

Lifestyle1 week ago

Lifestyle1 week agoSend Holiday Parcels for £1.99 with New Comparison Service

-

Science2 months ago

Science2 months agoResearchers Achieve Fastest Genome Sequencing in Under Four Hours

-

Business2 months ago

Business2 months agoIconic Sand Dollar Social Club Listed for $3 Million in Folly Beach

-

Politics2 months ago

Politics2 months agoAfghan Refugee Detained by ICE After Asylum Hearing in New York

-

Business2 months ago

Business2 months agoMcEwen Inc. Secures Tartan Lake Gold Mine Through Acquisition

-

Health2 months ago

Health2 months agoPeptilogics Secures $78 Million to Combat Prosthetic Joint Infections

-

Science2 months ago

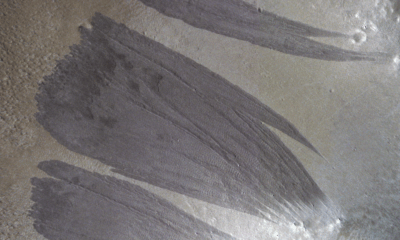

Science2 months agoMars Observed: Detailed Imaging Reveals Dust Avalanche Dynamics

-

Lifestyle2 months ago

Lifestyle2 months agoJump for Good: San Clemente Pier Fundraiser Allows Legal Leaps

-

Health2 months ago

Health2 months agoResearcher Uncovers Zika Virus Pathway to Placenta Using Nanotubes

-

Entertainment2 months ago

Entertainment2 months agoJennifer Lopez Addresses A-Rod Split in Candid Interview