Elon Musk’s Changes at X Spark Debate on Social Media Safety

Social media has come under scrutiny following significant changes Elon Musk made after acquiring Twitter, now rebranded as X. Since the 2022 purchase, Musk has reinstated numerous accounts previously banned for promoting hate speech and misinformation, raising concerns about the platform’s role in shaping political discourse and the integrity of information shared online.

Foreign interference via social media has been a prominent concern for years. A 2014 BuzzFeed News report exposed the tactics of the Internet Research Agency, a Russian organization that ran a propaganda campaign across platforms including Facebook and Twitter. Yet despite more than a decade of discussion about foreign troll operations, the United States continues to grapple with their extent and impact.

After Musk’s takeover, a 2024 CNN analysis identified troubling patterns among pro-Trump accounts on X. The investigation found that 56 such accounts exhibited “a systematic pattern of inauthentic behavior,” some of them bearing verified blue check marks. Eight of these accounts used stolen images of prominent European influencers to appear credible. Musk’s changes have substantially weakened the platform’s safeguards against misinformation, alarming users and experts alike.

Under Musk’s leadership, X has dismantled key teams that once worked to combat the spread of falsehoods. The platform’s monetization strategy relies heavily on user engagement, which is often fueled by controversial topics and cultural disputes. The result is a feedback loop that boosts engagement while further complicating the political landscape, particularly as factions within Trump’s base begin to fracture.

Concerns About Safety and Accountability

In October, Nikita Bier, X’s head of product, proposed a feature that would display users’ geographic locations, in response to concerns about foreign bots. The idea gained traction after conservative commentator Katie Pavlich called on Musk to address the issue publicly. Bier promised to act within 72 hours, and the feature was subsequently rolled out, albeit with significant flaws.

Despite initial praise from prominent conservatives, including Florida Governor Ron DeSantis, the rollout drew criticism over its potential risks to user safety. The feature inadvertently revealed the operating locations of several prominent MAGA accounts, some of which were identified as being based in Nigeria. Meanwhile, users who disguise their identities to amplify their reach can still exploit the system effectively.

Concerns extend beyond the feature’s technical flaws. Journalists, particularly those covering authoritarian regimes, face heightened risks when their locations are displayed inaccurately. The potential for such exposure without proper safeguards poses a substantial threat to their safety.

The Broader Impact on Social Media

X is not alone in its struggles with safety and misinformation. Recent court filings involving Meta, the parent company of Instagram, revealed a “17x” strike policy for accounts involved in human trafficking: such accounts could accumulate 16 violations and faced suspension only on the 17th. The policy raises serious questions about the effectiveness of Meta’s content moderation practices.

Internal studies conducted by Meta also reportedly linked social media use to mental health problems, including increased anxiety and depression among users. One notable study, dubbed “Project Mercury,” found that users who temporarily deactivated their accounts reported improved mental well-being. Despite this knowledge, the company appears to have prioritized revenue over user safety, delaying potential solutions.

These dynamics highlight a fundamental conflict between engagement-driven growth and the need for transparency and safety. As long as platforms like X prioritize user engagement, particularly through controversial content, the risk of misinformation and harmful behavior will remain high. Meeting calls for better content moderation and transparency will require not only operational changes but also comprehensive regulatory reform to protect users in an increasingly complex digital environment.

The implications of these developments extend beyond individual platforms to the broader debate about social media’s role in contemporary society. As these incidents unfold, it is increasingly clear that systemic change is urgently needed to safeguard the integrity of information and the safety of users.

-

Lifestyle1 week ago

Lifestyle1 week agoSend Holiday Parcels for £1.99 with New Comparison Service

-

Science2 months ago

Science2 months agoUniversity of Hawaiʻi Leads $25M AI Project to Monitor Natural Disasters

-

Science2 months ago

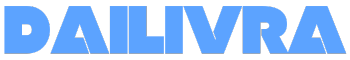

Science2 months agoInterstellar Object 3I/ATLAS Emits Unique Metal Alloy, Says Scientist

-

Science2 months ago

Science2 months agoResearchers Achieve Fastest Genome Sequencing in Under Four Hours

-

Business2 months ago

Business2 months agoIconic Sand Dollar Social Club Listed for $3 Million in Folly Beach

-

Politics2 months ago

Politics2 months agoAfghan Refugee Detained by ICE After Asylum Hearing in New York

-

Business2 months ago

Business2 months agoMcEwen Inc. Secures Tartan Lake Gold Mine Through Acquisition

-

Health2 months ago

Health2 months agoPeptilogics Secures $78 Million to Combat Prosthetic Joint Infections

-

Lifestyle2 months ago

Lifestyle2 months agoJump for Good: San Clemente Pier Fundraiser Allows Legal Leaps

-

Science2 months ago

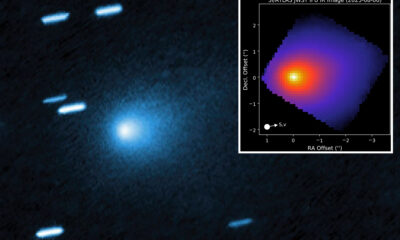

Science2 months agoMars Observed: Detailed Imaging Reveals Dust Avalanche Dynamics

-

Health2 months ago

Health2 months agoResearcher Uncovers Zika Virus Pathway to Placenta Using Nanotubes

-

Entertainment2 months ago

Entertainment2 months agoJennifer Lopez Addresses A-Rod Split in Candid Interview